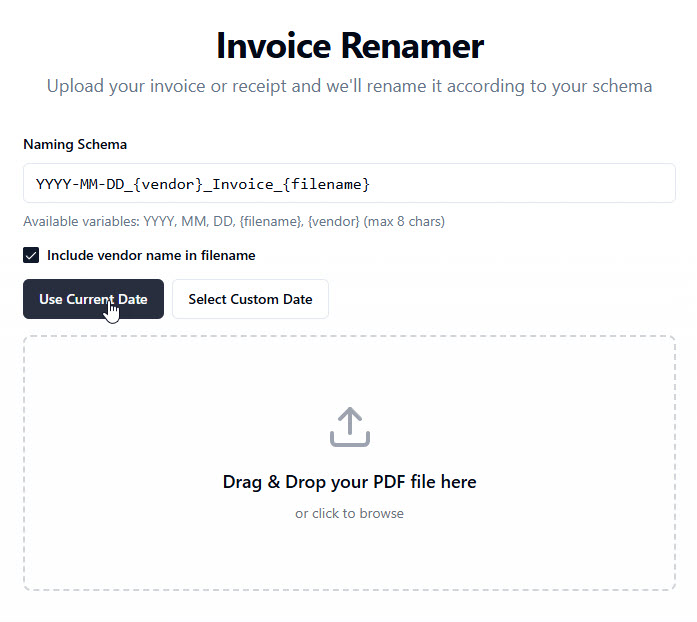

The other day, I built an app without touching a single line of code.

It started with my accountant, who—like clockwork—sent me a long list of missing receipts needed to close the books. If you've ever run a startup or small business, you know this ritual well. You scramble to gather invoices, rename them into something comprehensible, and send them back for processing. It's a mess of filenames with no context.

I wanted a smarter way to rename each receipt with the vendor name, date, and amount. So, I ventured into the world of AI-assisted "no-code" development.

On the recommendation of an investor, I tried Lovable, a tool he’s been using to automate back-office processes at his investment fund. Unlike many no-code platforms, Lovable generates visible code, allowing those who can read and tweak code to fine-tune results.

Four hours and $20 later, I had my app. It ingests PDF receipts and renames them in a structured way that my accountant now loves. In hindsight, I probably could have renamed each file manually in less than four hours—but now I have a tool that will save time in the long run.
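For the curious, the renaming step itself is simple enough to sketch by hand. What follows is not the code Lovable generated. It is a minimal illustration that assumes the vendor, date, and amount have already been pulled out of the PDF, and the filename pattern, the `CHF` default, and the function names `slugify`, `structured_name`, and `rename_receipt` are all my own assumptions.

```python
import re
from datetime import date
from decimal import Decimal
from pathlib import Path


def slugify(text: str) -> str:
    """Lowercase the text and collapse every run of non-alphanumeric
    ASCII characters into a single hyphen (accented letters are dropped)."""
    return re.sub(r"[^a-z0-9]+", "-", text.lower()).strip("-")


def structured_name(vendor: str, issued: date, amount: Decimal,
                    currency: str = "CHF") -> str:
    """Build a sortable filename: <ISO date>_<vendor>_<amount>-<currency>.pdf.
    The pattern is an assumption, not the one used by the actual app."""
    return f"{issued.isoformat()}_{slugify(vendor)}_{amount:.2f}-{currency}.pdf"


def rename_receipt(path: Path, vendor: str, issued: date, amount: Decimal,
                   currency: str = "CHF") -> Path:
    """Rename a receipt in its current folder and return the new path.
    Refuses to overwrite an existing file with the same structured name."""
    target = path.with_name(structured_name(vendor, issued, amount, currency))
    if target.exists():
        raise FileExistsError(target)
    return path.rename(target)


if __name__ == "__main__":
    # Hypothetical receipt details, typed in by hand for the example.
    print(structured_name("Acme GmbH", date(2025, 1, 31), Decimal("120.5")))
```

Putting the date first in ISO format means the files sort chronologically in any file browser, which is most of what an accountant needs. The hard part, reading the vendor, date, and amount out of each PDF, is exactly where the AI tool earned its keep and is left out here.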

This small experience reinforced something important: AI removes barriers. No more excuses like "I don’t have time to learn programming." The same applies to learning human languages. Over the weekend, I spoke with someone working in drug delivery quality control (because in Switzerland, half the people you meet work in pharma, and the other half work in banking). He told me that instead of spending hours translating and reviewing technical documents, he now runs them through DeepL—getting a near-instant, high-quality translation. What used to require fluency and significant time now takes minutes.

So, it should be no surprise that patients are also using AI to manage their health. Some are even pushing the frontiers of AI-driven healthcare.

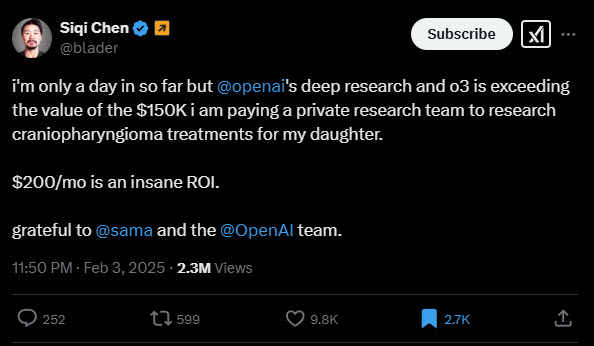

Take this example from a caregiver dad using ChatGPT Pro for deep medical research on behalf of his child:

🔗 Read the thread here.

Later in the thread, OpenAI’s Chief Product Officer joins the discussion—highlighting how even the people building AI tools recognize their transformative potential in healthcare.

If, like me, you learn best by hearing what others have tried, I highly recommend listening to my recent podcast conversation with Jeff TenBroeck, one of our patient experts in atopic dermatitis. Jeff has used AI for years—both as a patient and as a business owner. When he was first diagnosed, he, like many others, turned to Google to understand his condition. But instead of just reading articles, he started having direct conversations with AI.

"Instead of Googling my questions, I’d ask ChatGPT and have a back-and-forth conversation, refining my queries until I got the information I needed," Jeff explained in our latest podcast.

AI isn’t just a search engine replacement—it’s a companion for patients. Instead of passively reading medical information, Jeff uses AI to structure his research:

- Preparing for doctor visits – He structures questions to ask his doctor, making the most out of limited appointment times.
- Understanding his condition – He asks ChatGPT to break down medical papers into plain language.
- Verifying information – He cross-checks AI-generated answers against trusted sources like the National Eczema Association before making any healthcare decisions.

But AI’s power comes with risks. As Jeff warns, AI sounds authoritative—even when it’s wrong. That’s why fact-checking AI responses is essential.

Of course, AI in healthcare isn’t just about convenience—it’s also about responsibility. Ethics in our industry has traditionally been handled by Standard Operating Procedures (SOPs) and codes of conduct. Follow them, use your best judgment, and you generally stay on the right side of ethics.

But AI breaks this ethical comfort zone.

We’re now dealing with semi-autonomous agents, and soon, perhaps fully autonomous ones, influencing clinical studies, drug development, regulatory filings, marketing, and patient interactions. The ethical questions shift from "Did we follow the SOP?" to "Did an AI-driven decision result in harm?"

That’s a topic for a future post.

For now, I’ll leave you with this: AI is already making an impact. The question is, how will you use it?

If you're curious about the AI tools and discussions mentioned in this article, check out the following:

About Jeff TenBroeck

Jeff TenBroeck is a patient expert in atopic dermatitis, entrepreneur, and tech enthusiast. Always on the lookout for smarter ways to manage his condition and business, he experiments with AI-driven solutions to streamline workflows and improve daily life.

About Merakoi

At Merakoi, we believe the best healthcare solutions come from those who live with the conditions every day—patients. We connect life sciences companies with patient experts to co-design better treatments, trials, and healthcare innovations.

AI can crunch your data, but it can’t replace lived experience. Need insights that actually matter? Let's chat!
